Mobile Mapping System

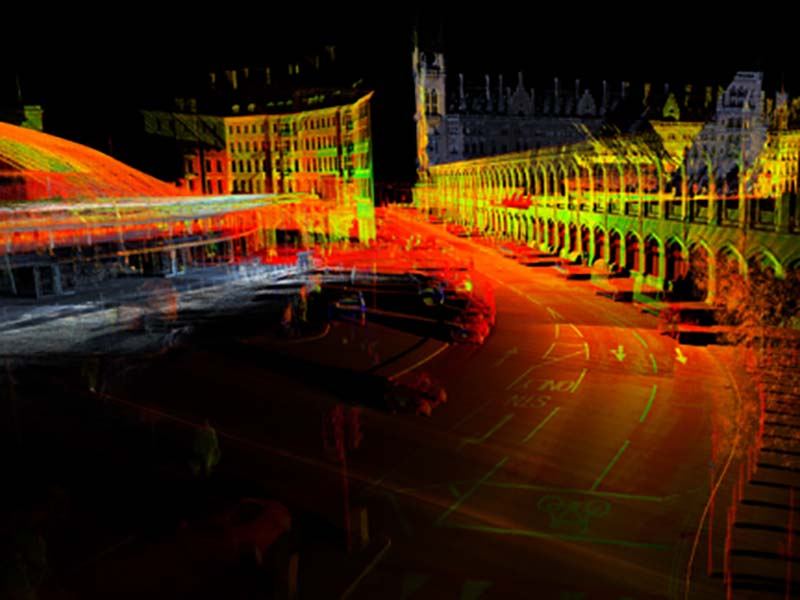

Getmapping Plc operates multiple Pegasus:Two Ultimate mobile mapping systems (MMSs). This survey was conducted using a Pegasus:Two Ultimate MMS (Figure 1) and a Pegasus:Two upgraded to the Ultimate version. The cameras of the Ultimate have a high dynamic range thanks to a large sensor-to-pixel ratio and a dual-light sensor. This high dynamic range enables crisp images to be captured in a variety of lighting conditions and at various vehicle speeds, and the 12 MP sensor resolution further improves image quality.

Because the camera produces imagery with three times the resolution of standard systems, compression is a prerequisite for prolonged surveys without interruptions. Onboard JPEG compression allows large volumes of images to be stored on the removable drive during capture without compromising image quality. Data is saved directly to this drive, which connects to any PC or server via a USB 3.0 interface.

The side cameras capture eight frames per second (FPS) with a field of view of 61° × 47°. The maximum ground sample distance (GSD) at a distance of 10 m from the camera is 3 mm. The fish-eye camera system, which consists of two cameras mounted back to back, provides seamless 24 MP imagery with a 360° field of view. The dual fish-eye camera system is aligned with the laser scanner, enabling colourization of the laser point clouds (Figures 2 and 3).
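The camera figures above can be cross-checked with simple pinhole geometry, and colourizing a point cloud essentially means projecting each laser point into the panorama and reading off the pixel colour. The Python sketch below is illustrative only and rests on loudly stated assumptions: a 4000 × 3000 pixel layout for the 12 MP side camera (the text does not give one), an ideal distortion-free camera, a stitched equirectangular 360° panorama, and laser points that have already been transformed into the panorama frame using the system calibration. All function names are hypothetical and are not part of Leica Pegasus:Manager.

```python
import math

def ground_sample_distance(distance_m, fov_deg, pixels):
    """Approximate GSD in metres per pixel for an ideal pinhole camera looking at
    a surface perpendicular to its optical axis: footprint width / pixel count."""
    footprint_m = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return footprint_m / pixels

def frame_spacing_m(speed_kmh, fps):
    """Distance the vehicle travels between two consecutive frames, in metres."""
    return (speed_kmh / 3.6) / fps

def equirect_pixel(x, y, z, width, height):
    """Project a point given in the panorama frame (x forward, y left, z up;
    an assumed axis convention) to (column, row) in an equirectangular image."""
    lon = math.atan2(y, x)                      # azimuth, 0 = straight ahead
    lat = math.atan2(z, math.hypot(x, y))       # elevation, +pi/2 = straight up
    col = int((0.5 - lon / (2.0 * math.pi)) * width) % width
    row = min(int((0.5 - lat / math.pi) * height), height - 1)
    return col, row

def colourize(points, image):
    """Attach to each (x, y, z) point the RGB value of the pixel it projects to.
    `image` is a list of rows, each row a list of (r, g, b) tuples."""
    height, width = len(image), len(image[0])
    coloured = []
    for x, y, z in points:
        col, row = equirect_pixel(x, y, z, width, height)
        coloured.append((x, y, z, image[row][col]))
    return coloured

# Hypothetical 4000 x 3000 layout for the 12 MP side camera (assumption, not from the text).
gsd_h = ground_sample_distance(10.0, 61.0, 4000)
gsd_v = ground_sample_distance(10.0, 47.0, 3000)
print(f"GSD at 10 m: {gsd_h * 1000:.1f} mm (horizontal), {gsd_v * 1000:.1f} mm (vertical)")
# Hypothetical vehicle speed of 30 km/h with the stated 8 FPS side-camera rate.
print(f"Frame spacing at 30 km/h and 8 FPS: {frame_spacing_m(30, 8):.2f} m")
```

Under these assumptions both the horizontal and vertical GSD come out at about 2.9 mm, in line with the 3 mm quoted above, and at 30 km/h consecutive side-camera frames are roughly 1 m apart. A production colourization would also model fish-eye lens distortion and occlusion, which this sketch ignores.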

Survey

The MMS capture of London’s boroughs was conducted in two steps. In the first step, six boroughs were surveyed in autumn 2017. Three surveyors drove approximately 50 km per day to capture 25 km of road trajectories, usually driving each road in back-and-forth passes, which is why the distance driven was about twice the length captured. The survey continued throughout the winter, even when light conditions were poor. No surveyors or traffic management crews were needed on the street; all data was captured from the vehicle. The captured images and laser point clouds were subsequently converted into maps during the first six months of 2018. Two office-based operators processed the data and stored it on hard disks using Leica Pegasus:Manager, which uses the latest system calibration methods to precisely overlay imagery and point cloud data.

Mapping

The points, symbols, polygons and point features making up the parking zones were extracted manually and (semi-)automatically from the point clouds and 360° fish-eye imagery using Leica MapFactory, which is embedded in Esri ArcMap. The extracted data can be imported into Esri solutions or other GIS platforms for further processing and use. The information on parking signs was extracted from the imagery and attributed to the relevant parking zone. The outline of each parking zone was represented by a polygon. Wherever outlines were occluded (i.e. obstructed from view by cars or other objects in the line of sight), the polygons were collected and refined interactively. For example, the visible lines were extended and intersected with each other to obtain the coordinates of hidden corner points of parking zones.
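Recovering a hidden corner as described above amounts to intersecting two straight lines in the plane. A minimal sketch, assuming each visible marking has been digitised as two points in a local projected coordinate system in metres; the function name and the coordinates are hypothetical and do not reflect MapFactory's own tools:

```python
from typing import Optional, Tuple

Point = Tuple[float, float]

def intersect_lines(p1: Point, p2: Point, p3: Point, p4: Point) -> Optional[Point]:
    """Intersect the infinite line through p1-p2 with the one through p3-p4.

    Returns None when the lines are (nearly) parallel, i.e. no unique corner exists.
    """
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, p3, p4
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(denom) < 1e-12:
        return None
    det12 = x1 * y2 - y1 * x2   # 2D cross product of the first line's two points
    det34 = x3 * y4 - y3 * x4   # 2D cross product of the second line's two points
    px = (det12 * (x3 - x4) - (x1 - x2) * det34) / denom
    py = (det12 * (y3 - y4) - (y1 - y2) * det34) / denom
    return px, py

# Hypothetical bay markings (metres): one edge is visible from (0, 1) to (2, 2),
# the perpendicular edge from (6, 0) to (5, 2); a parked car hides their corner.
corner = intersect_lines((0.0, 1.0), (2.0, 2.0), (6.0, 0.0), (5.0, 2.0))
print(corner)
```

Here the two extended edges meet at (4.4, 3.2), which would become the occluded vertex of the parking-zone polygon. The parallel check matters in practice: two nearly parallel markings give an unstable intersection far from the bay, so such cases are better resolved interactively.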

Results and Challenges

Concluding Remarks

The imagery and point clouds can also be used for asset management, drainage projects, road safety improvements, highway maintenance, 5G telecoms and environmental analysis. Based on all the collected data and extracted features, AppyWay is able to deliver highly accurate and detailed traffic management data to its smart parking systems.